Made with Adobe Firefly

*This article discusses the UX and the world-building aspects of Memory Room. For the article about the content, click here.

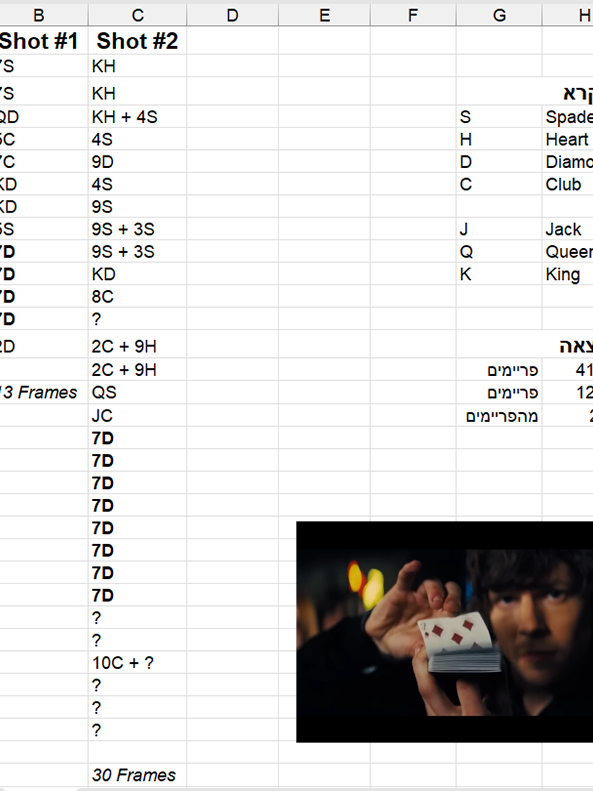

Different objects behave differently. We hold certain expectations of a lighter, for instance: we know what it can do and how it is used, and those expectations differ from the ones we have of canvas photos, CDs and books. Any virtual reality experience with interactive objects must address these expectations, which are shaped by our daily experience with such objects.

I believe that an innovative experience is one that offers unique ways of interacting with the environment. We can use one or more of the sensors and input systems to make players curious about the plot or the messages. In my project "Memory Room", I used several input systems to get the visitors involved. This page covers some of the interactive objects I put in the experience.

1. Candle – proximity and microphone.

When we see a candle in a space dedicated to someone, we usually look for matches or a lighter. I think it is not just a pyromaniac urge; it is a way to participate without saying a word. So it is no wonder that people who see a candle search for a lighter to grab and use. Then we ask ourselves, "How do I light a candle in real life?" It is very simple: we press and hold the trigger of the lighter, usually with our thumb, and bring the flame close to the wick.

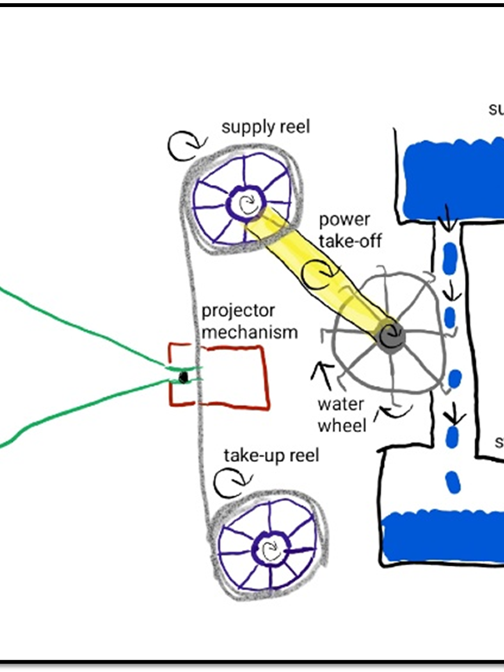

If the virtual lighter has physical boundaries (a "collider"), we can grab it. If both the lighter's flame and the wick have colliders, making them touch each other can activate the candle's flame object and add a little light at the base.
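The collider idea can be sketched in a few lines. The project itself was built in Unity; the Python below is only a language-neutral illustration, and every name in it (`SphereCollider`, `Candle`, the radii) is hypothetical rather than taken from the project's scripts. It assumes both the lighter's flame and the wick are approximated by spheres.

```python
import math
from dataclasses import dataclass


@dataclass
class SphereCollider:
    """A spherical boundary around an object (hypothetical stand-in for an engine collider)."""
    center: tuple
    radius: float

    def overlaps(self, other: "SphereCollider") -> bool:
        # Two spheres touch when the distance between their centers
        # is no larger than the sum of their radii.
        return math.dist(self.center, other.center) <= self.radius + other.radius


class Candle:
    def __init__(self, wick: SphereCollider):
        self.wick = wick
        self.lit = False  # stands in for enabling the flame object and its light

    def update(self, lighter_flame: SphereCollider, lighter_flame_active: bool) -> None:
        # The candle ignites only if the lighter is burning AND its flame touches the wick.
        if lighter_flame_active and lighter_flame.overlaps(self.wick):
            self.lit = True


candle = Candle(wick=SphereCollider(center=(0.0, 1.0, 0.0), radius=0.02))
flame = SphereCollider(center=(0.0, 1.03, 0.0), radius=0.02)
candle.update(flame, lighter_flame_active=True)
```

Checking that the lighter's flame is active keeps an unlit lighter from igniting the candle just by brushing against it.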

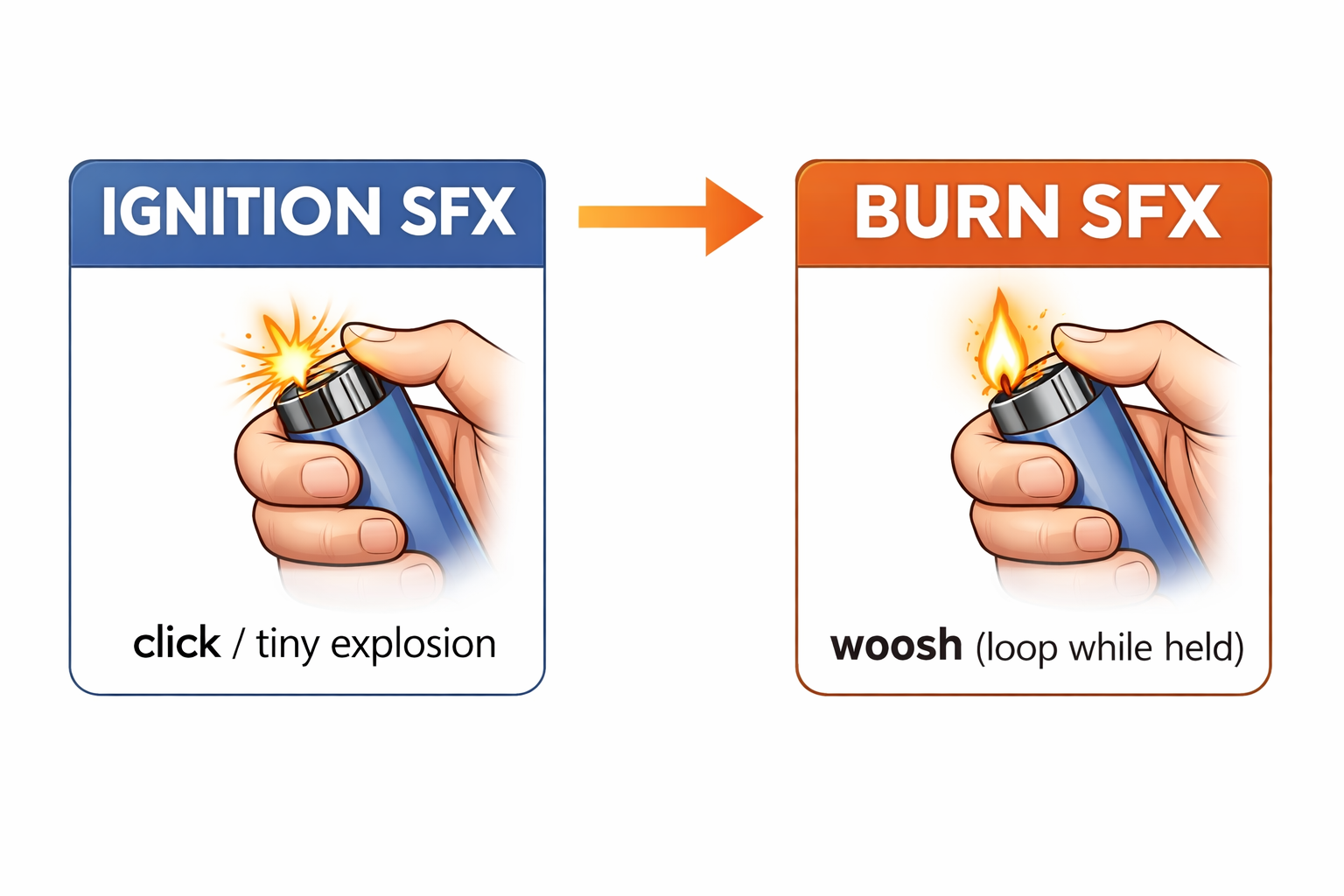

The sound of a lighter is also something to consider. Every press of the trigger produces a click at the start of the ignition (it sounds like a tiny explosion), followed by the "whoosh" of the burn, which keeps playing for as long as we hold the trigger. This audio detail requires a dedicated script that differentiates between the ignition and burn sound effects.
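One possible way to separate the two sounds is to react to the moment the trigger changes state rather than to the trigger being held. The sketch below is an assumption about how such a script could look, not the project's actual code; the class name and the log entries are made up, and the log simply stands in for real audio playback.

```python
from dataclasses import dataclass, field


@dataclass
class LighterAudio:
    """Decides which sound event fires on each frame (hypothetical names)."""
    trigger_was_held: bool = False
    log: list = field(default_factory=list)  # placeholder for actual audio calls

    def update(self, trigger_held: bool) -> None:
        if trigger_held and not self.trigger_was_held:
            # Trigger just pressed: play the click once, then start the looping burn.
            self.log.append("play ignition (one-shot)")
            self.log.append("start burn (loop)")
        elif not trigger_held and self.trigger_was_held:
            # Trigger just released: stop the looping burn.
            self.log.append("stop burn")
        # While the trigger stays held, nothing new is started, so the loop keeps playing.
        self.trigger_was_held = trigger_held


lighter = LighterAudio()
for held in [False, True, True, True, False]:
    lighter.update(held)
```

Because the ignition is tied to the press itself, holding the trigger for many frames never retriggers the click.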

Lastly, extinguishing the candle. "How do we do it in real life?" is the key question that guided me to the following solution: if we are close enough and make a loud enough sound (at least as loud as a puff of air), the flame object is disabled. VR headsets nowadays contain microphones that can detect volume. With a more complex script we could detect not only proximity and loudness but also the type of sound, to exclude regular talking and prioritize exhalation.
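The proximity-plus-loudness rule can be sketched as follows. This is a simplified illustration in Python, not the Unity script used in the project: the function names and both thresholds (`max_distance`, `min_loudness`) are invented for the example, and loudness is assumed to be the root-mean-square of one buffer of microphone samples in the range -1 to 1.

```python
import math


def rms(samples):
    """Root-mean-square loudness of one microphone buffer (sample values in -1..1)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def should_extinguish(head_pos, flame_pos, samples,
                      max_distance=0.4, min_loudness=0.2):
    """Return True when the player is both close enough and loud enough.

    max_distance (in meters) and min_loudness are made-up values that would
    need tuning with real testers and real hardware.
    """
    distance = math.dist(head_pos, flame_pos)
    return distance <= max_distance and rms(samples) >= min_loudness


# A loud buffer close to the flame puts it out; the same buffer from afar does not.
close_and_loud = should_extinguish((0, 0, 0), (0, 0, 0.3), [0.5, -0.5, 0.5, -0.5])
far_and_loud = should_extinguish((0, 0, 0), (0, 0, 2.0), [0.5, -0.5, 0.5, -0.5])
```

Telling a blow apart from speech would need more than volume, for example some analysis of the sound's frequency content, which this sketch does not attempt.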

ignition + burn (per click)

burn (while holding the trigger)

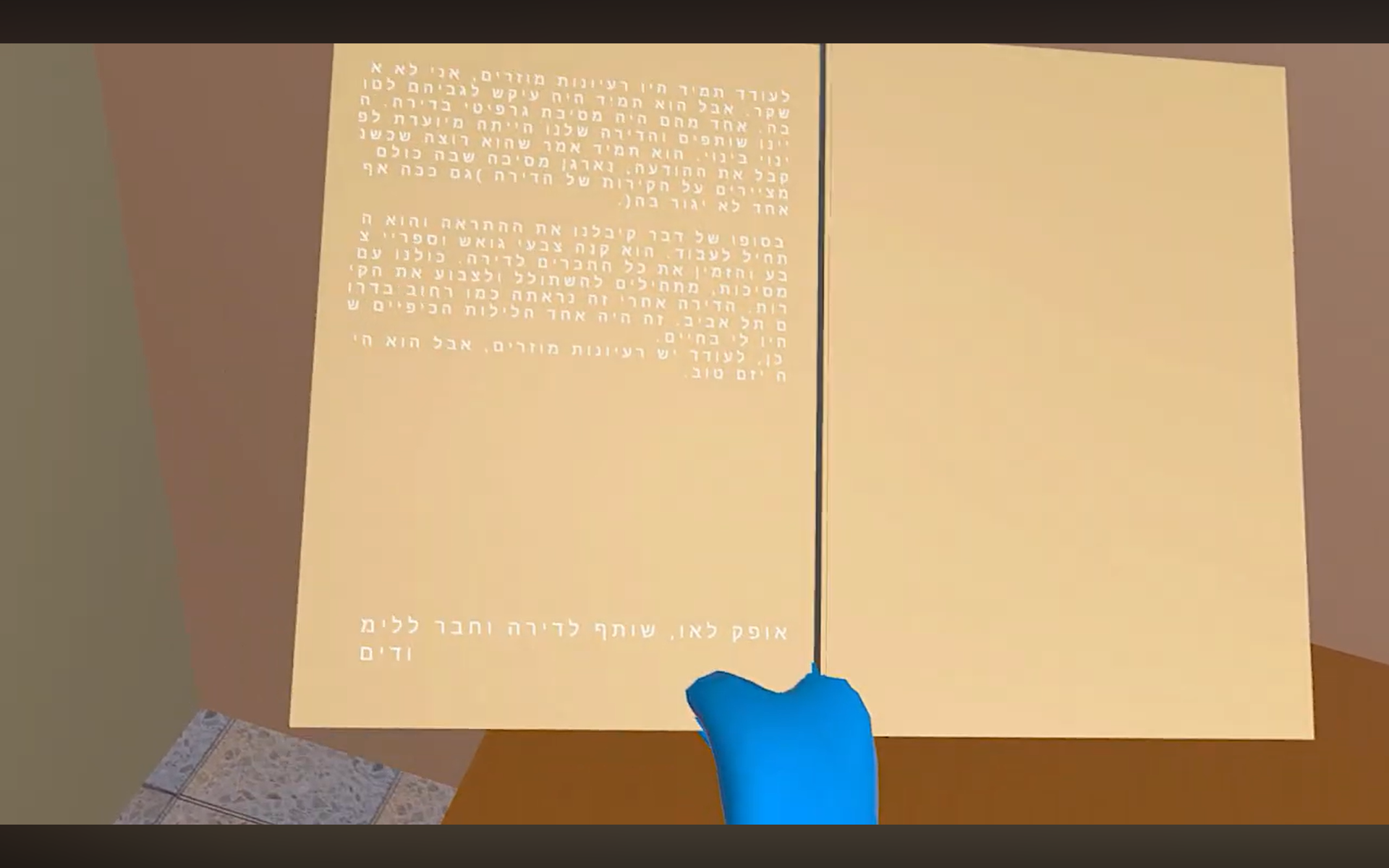

2. Notes, will and others – grab to change environment

Physical objects can trigger our memories. Seeing a bottle of wine, we may remember the moment we bought it or picture a vineyard. In reality that transformation is visible only to the person experiencing it, who remains in the same space; in VR it is entirely possible to appear in a new place by grabbing an object. That "grab" is just one simple way to start the function that changes something. The right questions to ask are:

- "What action should start the transformation?"

- "Why this object?"

- "What kind of transformation?"

Let me give you a breakdown from the project. We visit the most private place a person has to offer: his bedroom, where his creations are. The player grabs a note from a wall of personal quotes and poems, inspects it, grabs two more and is then moved to another environment. That environment is about a fragile ego: more creations, more doubt about success and more symbols of loneliness and failure.

The fact that the player took a note down from the wall, read it briefly and then moved on to another note has a lot to do with the way my ego is shown in the other environment; all my life, people looked at my art for a second and moved on. Therefore, grabbing is a great and simple action to start the transformation, specifically grabbing not one but three notes. By then, people have started pulling notes just for fun. That collection of notes is the answer to the second question: from creation to inner world. These notes are windows to my world. As for the transformation itself, when we grab the third note we pop into that environment while still holding the note, which serves as a clue to the action that brought us here. Even if we release it, we remain there.

In other transformations I considered, we see the other environment only while we keep the object in our hands. When we let go, we return to the original space we were in.
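The two kinds of transformation described in this section differ only in what happens on release, which a short sketch can make concrete. The Python below is illustrative only: the class names and the environment labels ("bedroom", "ego") are hypothetical, and counting distinct notes rather than total grabs is an assumption.

```python
class NoteWall:
    """Permanent transition: fires once enough different notes have been grabbed."""

    def __init__(self, notes_needed=3):
        self.notes_needed = notes_needed
        self.grabbed = set()          # ids of the notes taken down so far
        self.environment = "bedroom"  # hypothetical label

    def on_grab(self, note_id):
        self.grabbed.add(note_id)
        if len(self.grabbed) >= self.notes_needed:
            self.environment = "ego"  # hypothetical label

    def on_release(self, note_id):
        # Intentionally does nothing: letting go does not bring the player back.
        pass


class HeldObjectPortal:
    """Temporary transition: the other place exists only while the object is held."""

    def __init__(self, home, away):
        self.home = home
        self.away = away
        self.environment = home

    def on_grab(self):
        self.environment = self.away

    def on_release(self):
        self.environment = self.home


wall = NoteWall()
for note in ["note-1", "note-2", "note-3"]:
    wall.on_grab(note)
wall.on_release("note-3")  # the player stays in the new environment
```

Keeping the two behaviours in separate classes makes it easy to decide, object by object, whether a transformation should be a one-way door or a window.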

3. Shirts – grab to replace with a similar object

Generally, grabbing is probably the most basic trigger action in VR, like tapping on a touchscreen (probably because the script is relatively easy). Yet it can also be useful for swapping similar objects: the player grabs a folded shirt, and an unfolded one takes its place in their hands. That wrinkled shirt can signal that the player is allowed to do many things in the room, but not to put everything back in order. It is a lesson for us that although a virtual space can be reset at the press of a button, our journey inside it cannot. Our decision to disrupt is a valid option that has consequences.

4. Life story and photos – grab to play

One last thing about the grab action. Grabbing in VR is mostly about the visual sense: we grab and see a change. In reality, it is more about the sense of touch: we feel the material, its temperature, etc. To mimic that, there is usually a small vibration when we collide with objects.

But a grab can also affect the sense of hearing. When we grab an object that contains a lot of text, we commonly want to hear a voice reading it. We might want to hear it several times, or have the voice start over every time we grab the object (in case we missed something). It is important that no other dialog interrupts that reading, which may be useful for the plot.

In addition, in some cases a video or sound that plays when an object is grabbed seems like magic, as it doesn't work that way in the physical world. It is one of the nice things that an alternative reality has to offer.

In the project, several items play when grabbed. If we grab the official life story document written by the Ministry of Defense, we hear the voice of radio host Dan Kaner reading it. As long as we hold the document, he continues. When we pick up the document again, we hear it from the top. A visitor who wants to get to the end of the document needs to slow down and listen to the whole story. Anyone who tries to rush through will miss most of the experience.
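The hold-to-listen behaviour amounts to three rules: grabbing restarts the reading, releasing stops it, and only an uninterrupted hold reaches the end. A minimal sketch of that logic, with hypothetical names and a made-up clip length (the real project would drive an audio source in Unity instead of a number):

```python
class GrabNarration:
    """Tracks playback of a narrated document that only plays while held."""

    def __init__(self, clip_length):
        self.clip_length = clip_length  # length of the recording in seconds
        self.position = 0.0             # current playback position in seconds
        self.playing = False

    def on_grab(self):
        # Every grab starts the reading from the top.
        self.position = 0.0
        self.playing = True

    def on_release(self):
        # Letting go of the document silences the narrator.
        self.playing = False

    def update(self, dt):
        # Advance playback only while the document is held.
        if self.playing:
            self.position = min(self.position + dt, self.clip_length)
            if self.position >= self.clip_length:
                self.playing = False

    @property
    def finished(self):
        return self.position >= self.clip_length


story = GrabNarration(clip_length=10.0)  # 10 seconds is an invented length
story.on_grab()
story.update(4.0)
story.on_release()   # an impatient visitor lets go early
story.on_grab()      # picking it up again restarts the reading
```

Restarting from the top, rather than resuming, is what rewards the visitor who slows down and holds the document until the end.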

When we get to the photos from my mandatory service, we can look at them. When one is grabbed, the man with the guitar starts strumming. By the way, you will not hear him singing, although the original video includes it. This follows the concept of gradual exposure to the character of Oded: there is always something missing at any point until the very end of the experience (much like the shark in Jaws).

5. Visitors' book – QR code in VR

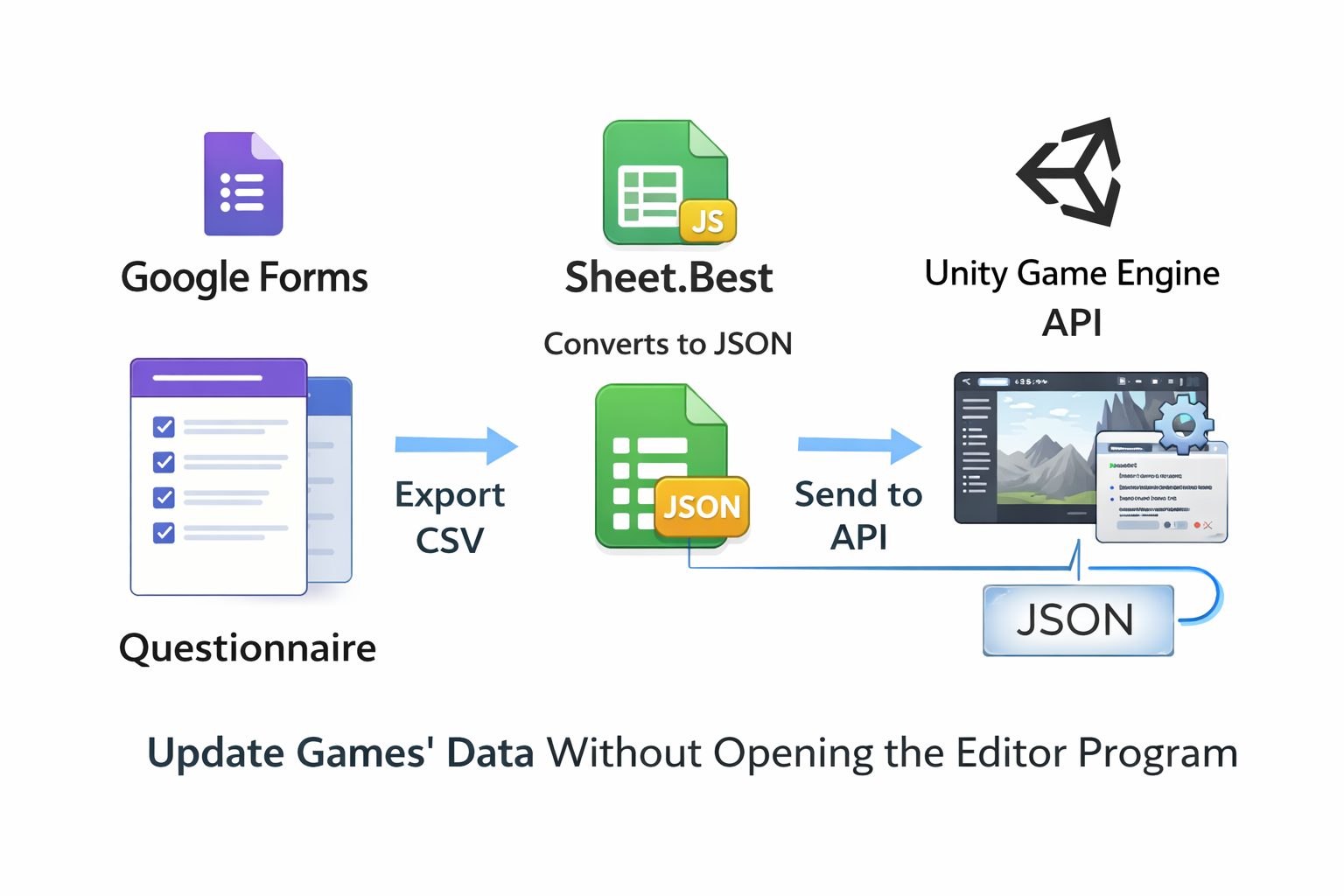

While finalizing the project I realized that it would be part of exhibitions, meaning that while one person visits the room, others watch on a screen. I wanted to give them something to do as well: if they unlock their phones and scan the QR code on the cover of the Visitors' book, they see a Google Form that invites them to share a memory of Oded. After they send their answer and the game is reloaded, the answer appears as a new page in the book.

This makes the experience unique by expanding the possibilities of interaction for the visitors. It also lets them take part in the creation as collaborators. This is, of course, only one of many ways to use QR codes and other second screens to involve other people in the experience.

The pipeline for this feature starts with a questionnaire created in Google Forms, whose answers are collected as a CSV file. Sheet.Best converts that file to JSON, which can be consumed through an API inside the Unity game engine. With this technology, it is possible to update a game's data without opening the editor.
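The last step of that pipeline, turning the JSON into book pages, might look roughly like the sketch below. It makes several assumptions that are not confirmed by the project: that the JSON is a list of objects keyed by the form's column headers, that the answer column is called "Memory", and that empty answers should be skipped. The sample rows are placeholders, and no network request is made; in the real project the JSON would be fetched from the API at load time.

```python
import json

# Placeholder rows in the assumed shape of the JSON; not real visitor answers.
SAMPLE_RESPONSE = """
[
  {"Timestamp": "placeholder-1", "Memory": "Example memory A"},
  {"Timestamp": "placeholder-2", "Memory": "   "},
  {"Timestamp": "placeholder-3", "Memory": "Example memory B"}
]
"""


def build_pages(raw_json, answer_column="Memory"):
    """Turn form answers into a list of page texts, one page per non-empty answer."""
    rows = json.loads(raw_json)
    pages = []
    for row in rows:
        text = str(row.get(answer_column, "")).strip()
        if text:  # skip blank submissions
            pages.append(text)
    return pages


pages = build_pages(SAMPLE_RESPONSE)
```

Because the pages are rebuilt from the data every time the game loads, a new answer shows up in the book without anyone touching the project files.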

6. Yellow balls – speak to shoot

Besides detecting volume levels to disable objects, microphones can spawn them! To exemplify the effect of speaking in the environment dedicated to ego and creation, I let players shoot little yellow balls from their mouths. I had to decide what the balls would look like and how fast they would fly forward, then create the script that spawns them every time we speak.
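A speak-to-shoot mechanic can be reduced to a loudness threshold, a forward direction and a short cooldown so that one word does not release a solid stream of balls. The sketch below is an assumption about how this could be structured, not the project's script; the class names, the threshold, the speed and the cooldown are all invented values.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    position: tuple
    velocity: tuple


class VoiceShooter:
    """Spawns a ball at the mouth whenever the microphone is loud enough."""

    def __init__(self, loudness_threshold=0.1, speed=3.0, cooldown=0.2):
        self.loudness_threshold = loudness_threshold
        self.speed = speed                # meters per second, made-up value
        self.cooldown = cooldown          # minimum seconds between two balls
        self.time_since_shot = cooldown   # lets the very first sound fire at once
        self.balls = []

    def update(self, loudness, mouth_pos, forward, dt):
        # Move the balls that are already flying.
        for ball in self.balls:
            ball.position = tuple(p + v * dt for p, v in zip(ball.position, ball.velocity))
        # Spawn a new ball if the player is speaking and the cooldown has passed.
        self.time_since_shot += dt
        if loudness >= self.loudness_threshold and self.time_since_shot >= self.cooldown:
            velocity = tuple(self.speed * c for c in forward)
            self.balls.append(Ball(mouth_pos, velocity))
            self.time_since_shot = 0.0


shooter = VoiceShooter()
shooter.update(0.02, (0.0, 1.6, 0.0), (0.0, 0.0, 1.0), dt=0.25)  # silence: nothing spawns
shooter.update(0.50, (0.0, 1.6, 0.0), (0.0, 0.0, 1.0), dt=0.25)  # speech: one ball
shooter.update(0.50, (0.0, 1.6, 0.0), (0.0, 0.0, 1.0), dt=0.25)  # still speaking: another
```

The `forward` vector is expected to be the direction the headset is facing, so the balls leave the mouth along the player's line of sight.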

I must admit a few things:

1. Some testers didn't understand that their voice was the action that spawned the particles.

2. After understanding, they spoke just to shoot the particles around, looking for a reaction from the environment.

3. Knowing that testers would eventually try to shoot the particles around, I assigned a few objects to react to collisions with the mouth-particles: stars disappeared and statues were pushed away.

4. When I pitched this interaction idea, I was advised to present a question that players see immediately and that provokes them to speak.

Although it was partly misunderstood and maybe not well designed, I think that using voice as an input for shooting particles could be interesting, and have many uses, in shooting or adventure games.

Building my bedroom as a virtual environment was quite interesting for me. I sat for hours in my actual bedroom measuring things, trying to get the composition right. I wanted to make it as accurate as possible. Then I began taking pictures: the floor tiles, the covers of books and CDs, the color of the walls, the view from the window, the color of the red box… While copying the notes, I used Photoshop to record the locations of the pinholes in each paper and aligned the virtual pins with the holes. I got the generous help of Iris Fainberg, a brilliant graphic designer, with all the CD covers. I used Meshy.AI and Tripo to make the characters from pictures (Oded) and from a description alone (Ofra).